Cloud Quickstart

CS Cloud Quickstart Guide

This guide introduces the Computer Science (CS) cloud and covers starting a simple Kubernetes pod, basic networking concepts, and how to use storage. We will show you how to do things both in the web interface and through command line access.

Introduction

The CS cloud is a container-as-a-service resource. It allows you to run Docker-based containers on our hardware. Containers let you encapsulate your application with all of its dependencies, so the containerized application can run just about anywhere. The CS cloud is based on a Kubernetes cluster; Kubernetes is an open-source container orchestration system. We also use Rancher as a UI and proxy interface.

Accessing the Cloud

You access the CS cloud through https://cloud.cs.vt.edu. By default, all users get access to the Discovery cluster. This cluster is available for users to experiment with Kubernetes and develop their containerized applications, and for Techstaff to test updates to the system. Critical services should not be run on this cluster; other clusters will be available for running containerized user services. You can also use the Rancher UI to control your own Kubernetes cluster(s), which lets you share your personal cluster with others in the department. This works even when your cluster is behind a private network.

API/Command line access

You can also access the CS cloud via a command line tool called kubectl.

- Install the kubectl binary on your machine: https://kubernetes.io/docs/tasks/tools/install-kubectl/

- You will need a config file to tell kubectl how to connect to the CS cloud. Download this file from the web interface:

- Log into https://cloud.cs.vt.edu

- Select the cluster you want to access from the top left menu

- Click on the Cluster menu item at the top, if you are not already there

- Click on the Kubeconfig File button

- You can either download the file or copy and paste the content into your kubectl config file (normally ~/.kube/config on Linux)

- You can also access the kubectl command line directly from the web interface. Just click on the Launch kubectl button.
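
For orientation, a kubeconfig file is itself a YAML document that names a cluster, a user credential, and a context tying the two together. The sketch below shows that general shape only. The entry names, the server path, and the token are placeholders (assumptions, not values taken from the CS cloud), so the file generated by the Kubeconfig File button should be used as-is.

```yaml
# Sketch of a kubeconfig file (normally ~/.kube/config on Linux).
# All values are placeholders; use the file generated by the web interface.
apiVersion: v1
kind: Config
clusters:
- name: discovery                  # assumed cluster entry name
  cluster:
    server: https://cloud.cs.vt.edu/<path-from-downloaded-file>   # placeholder
users:
- name: discovery-user             # assumed user entry name
  user:
    token: <token-from-downloaded-file>   # placeholder; keep this secret
contexts:
- name: discovery
  context:
    cluster: discovery
    user: discovery-user
    namespace: <namespace>         # optional default namespace
current-context: discovery
```

The current-context entry selects which cluster and user kubectl talks to by default, and the optional namespace field in the context sets the namespace used when none is given on the command line.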

Creating a Project

When you first log into https://cloud.cs.vt.edu it will show you the clusters you have access to. Normally, this will only be the Discovery cluster. Also, you won't have any Projects unless someone has shared a Project with you. To get started, you will need to create a Project and a Namespace inside that project:

The Project will need to be created through the web interface.

- Select Discovery cluster by either clicking the name in the list, or selecting it from the top left menu (Global -> Cluster: discovery).

- Select Projects/Namespaces on the top menu

- Click on the Add Project button to create a Project

- Project Name is the only required field. It is set at the cluster scope, so the name can conflict with other users' project names. I suggest prepending your username to the project name, for example: mypid-project1

- After you create your project, it will take you to a screen that will allow you to add a namespace to your project.

- Namespaces allow you to group Pods and other resources together. This namespace translates to the Kubernetes namespace, so again the names can conflict with other users. Example namespace name: mypid-project1-db, for grouping all of project1's database pods together.

- Click on the Add Namespace button to create a Namespace

- Name is the only field needed

- Click on the Project's title or use the top left menu to select your new Project. This will take you to the Project's page.

- At this point you are ready to start a Pod

Starting a Deployment

Kubernetes groups containers into a Pod. A Pod can be thought of roughly as a single machine with a single IP address on the Kubernetes network. Containers running in the same Pod can communicate with each other via localhost. The simplest Pod is just a single container, which is what we will show you here. A Deployment is a Kubernetes resource that manages Pods and can scale out to multiple copies of a Pod. For our example, we will only be starting a single Pod.

Example: Ubuntu shell

In this example, we will show how to start up an Ubuntu container and access it through a shell prompt.

Via Web Interface

- From the Workloads page, click on the Deploy button

- Give your deployment a Name. This name must be unique within the Namespace

- Namespace should be automatically selected to the one you created earlier

- Docker Image can be any valid Docker URL. For this example, use ubuntu:bionic

- You can leave the other options on the defaults for now, and click on Launch

- It will take a moment for the system to deploy your Workload, and it will give you a progress indicator that will turn solid green when ready.

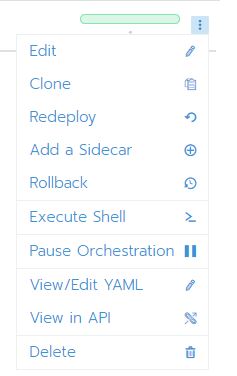

- Click on the ... menu icon far right of your Workload to access the menu

- Select Execute Shell to get an in-browser command line shell into your Ubuntu container

- From here, you can run commands as you would on an Ubuntu machine. Note: you have root-level privileges in your container

Via Command Line

- This example assumes you have already installed and configured the kubectl command

- You can create and update resources in Kubernetes using manifests. Manifests are text files in YAML format that describe a resource

- Here is an example manifest to create a simple Pod, in this case an Ubuntu container. You will need to personalize metadata/namespace:

      apiVersion: v1
      kind: Pod
      metadata:
        name: ubuntu-shell
        namespace: user-project1-example
        labels:
          app: ubuntu
      spec:
        containers:
        - name: ubuntu
          image: ubuntu:bionic
          stdin: true
          tty: true

- Save this file as ubuntu-shell.yml

- Apply the manifest by running:

      kubectl apply -f ubuntu-shell.yml

- When you run kubectl, you need to tell it which Namespace to work in. You can either specify this with the -n <namespace> flag, or set the namespace for the current context, which saves you typing the namespace for every command:

      kubectl config set-context --current --namespace=<namespace>

- You will need to wait for the Pod to be ready. You can check the status by running the command below; it should only take a few moments for the Pod to be ready:

      kubectl get pods -n <namespace>

- Once your Pod is ready, you can access its shell by running:

      kubectl exec -it ubuntu-shell -n <namespace> -- /bin/bash
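
The web interface steps create a Deployment, while the manifest above creates a single bare Pod. As a minimal sketch, assuming the same names and namespace as the Pod example, an equivalent Deployment manifest could look like the following. The replica count of 1 is chosen here to match the single-Pod example; it is not something the guide prescribes.

```yaml
# Sketch: the ubuntu-shell example expressed as a Deployment instead of a bare Pod.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ubuntu-shell
  namespace: user-project1-example   # personalize, as in the Pod example
spec:
  replicas: 1                        # one Pod, matching the example above
  selector:
    matchLabels:
      app: ubuntu                    # must match the template labels below
  template:
    metadata:
      labels:
        app: ubuntu
    spec:
      containers:
      - name: ubuntu
        image: ubuntu:bionic
        stdin: true
        tty: true
```

With a Deployment, the Pod name gets a generated suffix, so look it up with kubectl get pods -n <namespace> before running kubectl exec.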

Networking

The CS cloud automatically sets up access to the cluster network when you start a Pod. Your Pod gets a random internal IPv4 address that can access other Pods in the cluster network, and the Internet via a gateway. There are also options to access your Pod(s) from external hosts.

Services

Since your Pods can come and go as needed, their IP is going to change often. Kubernetes offers a resource called a Service that will allow you to consistently access a Pod or set of Pods by name. You can think of this as a dynamic DNS service. In the Rancher web interface, a service entry will get generated automatically for you anytime you add a Port Mapping. A good example is creating a database service that can be used by your other Pods in the project:

Via Web Interface

This example will create a MongoDB Pod and Service entry

- From the Workloads Page, click on Deploy

- Set Name to database

- Set Docker Image to mongo

- Make sure Namespace is set

- Click on the Add Port button

- Set Publish the container port to 27017

- Set As a to Cluster IP (Internal only). This setting makes the service accessible only through the internal Kubernetes network

- Click on Launch

- After just a moment, you will have a MongoDB instance running that can be used by other Pods in your Project. Note: this is for testing only, since any data will be lost when the Pod is killed (we will cover this later...)

- Click on the Service Discovery menu item

- You will see that Rancher has automatically created a database service entry for you

- The database will be accessible from other Pods as the hostname database, which is a shortened alias for database.<namespace>.svc.cluster.local

Via Command Line

Creating the database example from the command line is a two-step process, but both steps can be combined into a single file.

- Create a database.yml file. You will need to personalize namespace:

      apiVersion: v1
      kind: Pod
      metadata:
        name: database
        namespace: user-project1-db
        labels:
          app: database
      spec:
        containers:
        - name: database
          image: mongo
          stdin: true
          tty: true
      ---
      apiVersion: v1
      kind: Service
      metadata:
        name: database
        namespace: user-project1-db
      spec:
        ports:
        - name: tcp27017
          port: 27017
          protocol: TCP
          targetPort: 27017
        selector:
          app: database
        type: ClusterIP

- This manifest will do two things: create a Pod running the MongoDB Docker image labeled with app: database, and create an internal Service entry that points to any Pod labeled app: database

- Apply this manifest to create the resources:

      kubectl apply -f database.yml

- To get the status of your Pod(s):

      kubectl get pod -n <namespace>

- To get the status of your Service(s):

      kubectl get service -n <namespace>
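
To illustrate how another Pod would use the Service name, here is a minimal sketch of a client Pod in the same namespace. The Pod name db-client and the environment variable MONGO_URL are hypothetical names chosen for illustration; a real application would read whatever setting it expects.

```yaml
# Sketch: a client Pod that is handed the database Service name (names are hypothetical).
apiVersion: v1
kind: Pod
metadata:
  name: db-client                  # hypothetical name
  namespace: user-project1-db      # same namespace as the database Service
spec:
  containers:
  - name: db-client
    image: ubuntu:bionic
    stdin: true
    tty: true
    env:
    # The short Service name resolves because client and Service share a namespace
    - name: MONGO_URL              # hypothetical variable name
      value: mongodb://database:27017
```

Because the client runs in the same namespace as the Service, the short hostname database resolves. From another namespace, the namespace would need to be appended, as in database.<namespace>.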

Externally Accessible Services

The CS cloud offers options for externally accessing your services. These vary based on which cluster you are using; this example uses the Discovery cluster. There are two ways to expose your service externally: an ingress or a load balancer. The recommended way to expose web-based services is an ingress. A load balancer might be necessary for non-HTTP protocols, such as database connections.

Ingress

An ingress service uses a proxy (nginx) to map URLs to your container. This is the preferred way to expose services. A DNS entry pointing to the proxy needs to exist before the ingress will work. The Discovery cluster already has the hostname discovery.cs.vt.edu assigned. You can use this ingress hostname, but the URL namespace will be shared with all other users. If you need additional hostnames, you will need to contact the CS Techstaff. Encryption is automatically configured and applied to ingresses.

Via Web Interface

- From the Workloads page, click on the Deploy button

- Fill in Name with web-example

- Fill in Docker Image with rancher/hello-world (a simple web page Docker image provided by Rancher)

- Make sure that Namespace is filled in

- Click on Launch

- Click on Load Balancing tab

- Click on Add Ingress button

- Fill in Name with web-example-ingress

- Select Specify a hostname to use

- Fill in Request Host with example.discovery.cs.vt.edu

- Fill in Path with /web-example

- Make sure that Namespace is filled in

- Select web-example from Target drop-down list

- Fill in Port with 80

- Click on Save button

- It can take up to a couple of minutes for the CS cloud system to fully initialize your ingress.

- The web interface will add a /web-example link under your web-example name that points to your external service.

- Visit the link to view your service

Via Command Line

- Create a web-example.yml file. You will need to personalize namespace:

      apiVersion: v1
      kind: Pod
      metadata:
        name: web-example
        namespace: user-project1-web
        labels:
          app: web-example
      spec:
        containers:
        - name: web-example
          image: rancher/hello-world
          stdin: true
          tty: true
      ---
      apiVersion: v1
      kind: Service
      metadata:
        name: web-example-service
        namespace: user-project1-web
      spec:
        ports:
        - name: http
          port: 80
          protocol: TCP
          targetPort: 80
        selector:
          app: web-example
        type: ClusterIP
      ---
      apiVersion: extensions/v1beta1
      kind: Ingress
      metadata:
        name: web-example-ingress
        namespace: user-project1-web
      spec:
        rules:
        - host: example.discovery.cs.vt.edu
          http:
            paths:
            - backend:
                serviceName: web-example-service
                servicePort: 80
              path: /web-example

- This manifest will do three things: Create a Pod running the hello-world Docker image labeled with app: web-example, create an internal Service entry that points to any Pod labeled app: web-example, and create an ingress that points to the internal Service entry.

- Apply this manifest to create the resources:

      kubectl apply -f web-example.yml

- To get the status of your Pod(s):

      kubectl get pod -n <namespace>

- To get the status of your Ingress:

      kubectl get ingress -n <namespace>
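
The extensions/v1beta1 Ingress API used above was removed in Kubernetes 1.22. As a sketch, assuming the cluster runs Kubernetes 1.19 or newer (this guide does not state the cluster version), the same Ingress expressed with the networking.k8s.io/v1 API would look like this. The pathType value of Prefix is an assumption about the intended matching behavior.

```yaml
# Sketch: the web-example Ingress in the networking.k8s.io/v1 API (Kubernetes 1.19+).
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: web-example-ingress
  namespace: user-project1-web
spec:
  rules:
  - host: example.discovery.cs.vt.edu
    http:
      paths:
      - path: /web-example
        pathType: Prefix           # assumed; required field in the v1 API
        backend:
          service:
            name: web-example-service
            port:
              number: 80
```

In the v1 API, the backend is nested under service with separate name and port fields, replacing the serviceName and servicePort fields of the older API.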

Load Balancer

By default, when you create a load balancer service in the Discovery cluster, you will get a randomly assigned external IPv6 address. There are other options available, but they are reserved for special use cases. Contact Techstaff if you have a special network use case. In this example, we will create a simple web page that will be externally accessible via IPv6:

Via Web Interface

- From the Workloads page, click on the Deploy button

- Fill in Name with web-example

- Fill in Docker Image with rancher/hello-world (a simple web page Docker image provided by Rancher)

- Make sure that Namespace is filled in

- Click on the Add Port button

- Fill in Publish the container port with 80

- Set As a to Layer-4 Load Balancer

- Fill in On listening port with 80

- Click on Launch

- It can take up to a couple of minutes for the CS cloud system to fully initialize your external service access.

- The web interface will add an 80/tcp link under your web-example name that points to your external service.

- Visit the 80/tcp link to view your service

- Extra: to see the load balancing in action:

- Go back to the Workloads page, and click on the arrow left of your web-example name

- Click on the + to increase the number of Pods in your Deployment.

- Visit your external service again. Once the Load Balancer picks up the changes, you will be able to load the page multiple times and see it hit the different Pods.

Via Command Line

- Create a web-example.yml file. You will need to personalize namespace:

      apiVersion: v1
      kind: Pod
      metadata:
        name: web-example
        namespace: user-project1-web
        labels:
          app: web-example
      spec:
        containers:
        - name: web-example
          image: rancher/hello-world
          stdin: true
          tty: true
      ---
      apiVersion: v1
      kind: Service
      metadata:
        name: web-example
        namespace: user-project1-web
      spec:
        ports:
        - name: http
          port: 80
          protocol: TCP
          targetPort: 80
        selector:
          app: web-example
        type: LoadBalancer

- This manifest will do two things: create a Pod running the hello-world Docker image labeled with app: web-example, and create an external Service entry that points to any Pod labeled app: web-example

- Apply this manifest to create the resources:

      kubectl apply -f web-example.yml

- To get the status of your Pod(s):

      kubectl get pod -n <namespace>

- To get the status of your Service(s):

      kubectl get service -n <namespace>

- You should see the external IP of the web-example service
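
The web interface steps include scaling the workload to see load balancing in action. A bare Pod cannot be scaled, so a command-line equivalent would replace the Pod in the manifest with a Deployment. The following is a minimal sketch under that assumption. The replica count of 3 is arbitrary, and the labels are chosen to match the selector of the web-example Service above.

```yaml
# Sketch: several web-example Pods behind the same LoadBalancer Service.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-example
  namespace: user-project1-web
spec:
  replicas: 3                      # arbitrary; each replica is one Pod
  selector:
    matchLabels:
      app: web-example
  template:
    metadata:
      labels:
        app: web-example           # matched by the Service selector
    spec:
      containers:
      - name: web-example
        image: rancher/hello-world
```

Changing replicas and re-applying the file scales the Deployment up or down while the Service and its external IP stay the same.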

Storage

Docker containers are ephemeral, and any changes you make to them will be lost when they are deleted or replaced. Kubernetes offers options to have your changes survive a Pod restart. I will go over a couple of the most common ones here.

Configmaps

Kubernetes offers a key/value store available to your Pods. This is a great way to create configuration files that can be easily updated without having to re-create your whole Docker image. The value can then be mapped to a filename within your Pod(s). To show this visually, the example creates an Nginx Pod with a Config Map as the default page.

Via Web Interface

- From the Project page, select Resources->Config Maps from the menu at the top

- Click on the Add Config Map button

- Fill in Name with default-html

- Make sure Namespace is filled in

- Fill in Key with index.html

- Fill in Value with <html><body>Hello World</body></html>

- Click on Save

- From the Workloads page, click on Deploy

- Fill in Name with configmap-example

- Fill in Docker Image with nginx

- Make sure Namespace is filled in

- Click on the Add Port button

- Fill in Publish the container port with 80

- Set As a to Layer-4 Load Balancer

- Fill in On listening port with 80

- Expand the Volumes section below

- Click on the Add Volume... button, and select Use a config map

- Select default-html from the Config Map Name drop down list

- Fill in Mount Point with /usr/share/nginx/html

- Fill in Default Mode with 644

- Click on Launch

- Once your Pod is ready and accessible, you will notice that Nginx is serving out the "Hello World" contents of your Config Map. You can now update the contents of your Config Map and see the change to your website. Your changes will survive any Pod restarts.

Via Command Line

- Create a configmap-example.yml file. You will need to personalize namespace:

      apiVersion: v1
      kind: ConfigMap
      metadata:
        name: default-html
        namespace: user-project1-web
      data:
        index.html: <html><body>Hello World</body></html>
      ---
      apiVersion: v1
      kind: Pod
      metadata:
        name: configmap-example
        namespace: user-project1-web
        labels:
          app: configmap-example
      spec:
        containers:
        - name: configmap-example
          image: nginx
          stdin: true
          tty: true
          volumeMounts:
          - mountPath: /usr/share/nginx/html
            name: vol1
        volumes:
        - name: vol1
          configMap:
            name: default-html
            defaultMode: 0644
            optional: false
      ---
      apiVersion: v1
      kind: Service
      metadata:
        name: configmap-example
        namespace: user-project1-web
      spec:
        ports:
        - name: http
          port: 80
          protocol: TCP
          targetPort: 80
        selector:
          app: configmap-example
        type: LoadBalancer

- This manifest will do three things: create or update a Config Map with a simple index.html file, create a Pod running the Nginx Docker image with the Config Map mounted as a volume, and create an external Service entry that points to the Pod

- Apply this manifest to create the resources:

      kubectl apply -f configmap-example.yml

- To view the content of your Config Map:

      kubectl get configmap default-html -n <namespace> -o yaml

- To get the status of your Pod(s):

      kubectl get pod -n <namespace>

- To get the status of your Service(s):

      kubectl get service -n <namespace>

- Visit the external IP of your service to see that Nginx is serving out the simple "Hello World" file you created in the Config Map.

- You can edit the Config Map with:

      kubectl edit configmap default-html -n <namespace>
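
For anything longer than a one-line page, the value is easier to maintain as a YAML block scalar. This is a sketch of the same default-html Config Map with a multi-line index.html; the HTML content is illustrative only.

```yaml
# Sketch: the default-html Config Map with a multi-line value.
apiVersion: v1
kind: ConfigMap
metadata:
  name: default-html
  namespace: user-project1-web
data:
  # The "|" block scalar keeps line breaks, so the file is mounted as written
  index.html: |
    <html>
      <body>
        <h1>Hello World</h1>
        <p>Served from a Config Map.</p>
      </body>
    </html>
```

Each additional key under data becomes another file in the mounted directory.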

Persistent Volumes

Kubernetes also lets you mount a filesystem as a persistent volume. This is used when your application needs to store a lot of data or rapidly changing data, such as a database. The CS cloud offers on-demand persistent volumes through a Kubernetes Storage Class. Each cluster will handle persistent volumes differently; this example uses the Discovery cluster. For this example, we will create a MySQL Pod that stores its data in a persistent volume.

Via Web Interface

- From the Workloads Page, click on the Deploy button

- Fill in Name with mysql-example

- Fill in Docker Image with mysql

- Make sure Namespace is filled in

- Expand Environment Variables

- Click on the Add Variable button

- Fill in Variable with MYSQL_ROOT_PASSWORD

- Fill in Value with ChangeMe

- Expand Volumes near the bottom

- Click on the Add Volume... button and select Add a new persistent volume (claim)

- Fill in Name with mysql-example

- Make sure Use a Storage Class to provision a new persistent volume and Use the default class are selected

- Fill in Capacity with 5

- Click on the Define button

- Fill in Mount Point with /var/lib/mysql

- Fill in Sub Path in Volume with db. This is needed because mysql requires an empty directory.

- Click on the Launch button at the bottom

- It will take a few moments for the system to allocate the Volume and create the Pod

- You will now have a working MySQL DB that can be restarted or upgraded easily

Via Command Line

- Create a mysql-example.yml file. You will need to personalize namespace:

      apiVersion: v1
      kind: PersistentVolumeClaim
      metadata:
        name: mysql-example
        namespace: user-project1-web
      spec:
        accessModes:
        - ReadWriteOnce
        resources:
          requests:
            storage: 5Gi
        storageClassName: default
      ---
      apiVersion: v1
      kind: Pod
      metadata:
        name: mysql-example
        namespace: user-project1-web
        labels:
          app: mysql-example
      spec:
        containers:
        - name: mysql-example
          image: mysql
          stdin: true
          tty: true
          volumeMounts:
          - mountPath: /var/lib/mysql
            name: mysql-example
            subPath: db
          env:
          - name: MYSQL_ROOT_PASSWORD
            value: ChangeMe
        volumes:
        - name: mysql-example
          persistentVolumeClaim:
            claimName: mysql-example
      ---
      apiVersion: v1
      kind: Service
      metadata:
        name: mysql-example
        namespace: user-project1-web
      spec:
        ports:
        - name: tcp3306
          port: 3306
          protocol: TCP
          targetPort: 3306
        selector:
          app: mysql-example
        type: ClusterIP

- This manifest will do three things: create or update a Persistent Volume Claim, create a Pod running the mysql Docker image with the Persistent Volume mounted, and create a Service entry that points to the mysql instance internally

- Apply this manifest to create the resources:

      kubectl apply -f mysql-example.yml

- To view the status of your Persistent Volume Claim:

      kubectl get pvc -n <namespace>

- To get the status of your Pod(s):

      kubectl get pod -n <namespace>

- To get the status of your Service(s):

      kubectl get service -n <namespace>
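
The manifest above requests a storage class literally named default. As a sketch, assuming the cluster has a storage class marked as its default (the web interface's Use the default class option suggests this, but the guide does not confirm it), the storageClassName field can be left out and Kubernetes will fill in the default class.

```yaml
# Sketch: the same claim, relying on the cluster's default storage class.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-example
  namespace: user-project1-web
spec:
  accessModes:
  - ReadWriteOnce                  # mounted read-write by a single node
  resources:
    requests:
      storage: 5Gi
  # storageClassName omitted: the cluster's default storage class is used
```

The claim shows as Bound in the output of kubectl get pvc once a volume has been provisioned for it.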

Summary

Computing, networking, and storage are the basic components of hosting any computer service. The CS cloud, based on Kubernetes, lets you quickly and easily manage these components and compose them into your own service.

Learn More

Here are some links to learn more about CS Cloud, Kubernetes, and Docker:

- Advanced CS Cloud examples

- Real World Example using CS Cloud

- Use CS Docker Image Registry

- Kubernetes Basics: https://kubernetes.io/docs/tutorials/kubernetes-basics/

- Docker Hub (find just about any application already packaged to use with Docker): https://hub.docker.com/

- Learn about creating your own Docker images: https://docs.docker.com/get-started/