Cloud Example Jupyter

Revision as of 13:44, 16 August 2019

Work in progress

Introduction

The goal of this project is to support a class teaching basic programming using Jupyter Notebook. A Jupyter Notebook server is a single process that supports only one person, so supporting a whole class means running one notebook process per student. Jupyter offers JupyterHub, which automatically spawns these single-user processes. However, a single machine can only support around 50-70 students before suffering performance issues. Kubernetes allows this setup to scale out horizontally by spreading the single-user instances across physical nodes. I will give a breakdown of the different pieces needed to make this work and go into more detail on certain aspects.
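As a rough illustration of the scaling argument, the number of nodes a class needs can be estimated from the 50-70 students-per-machine figure. This is only a back-of-the-envelope sketch: it assumes every node has about the same capacity as the single machine described above, and the function name `nodes_needed` and the class size of 200 are made up for the example.

```python
import math


def nodes_needed(students, students_per_node=50):
    """Estimate how many nodes are needed for a class of `students`.

    Assumes (hypothetically) that each node handles `students_per_node`
    single-user notebook processes before performance degrades.
    """
    if students < 0 or students_per_node <= 0:
        raise ValueError("students must be >= 0 and students_per_node > 0")
    # Round up: a partially filled node is still a whole node.
    return math.ceil(students / students_per_node)


# Compare the pessimistic (50) and optimistic (70) ends of the range
# for a hypothetical class of 200 students.
for per_node in (50, 70):
    print(per_node, nodes_needed(200, per_node))
```

Using the lower figure of 50 gives the more conservative estimate, which leaves some headroom for JupyterHub itself and other cluster services.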

Basics

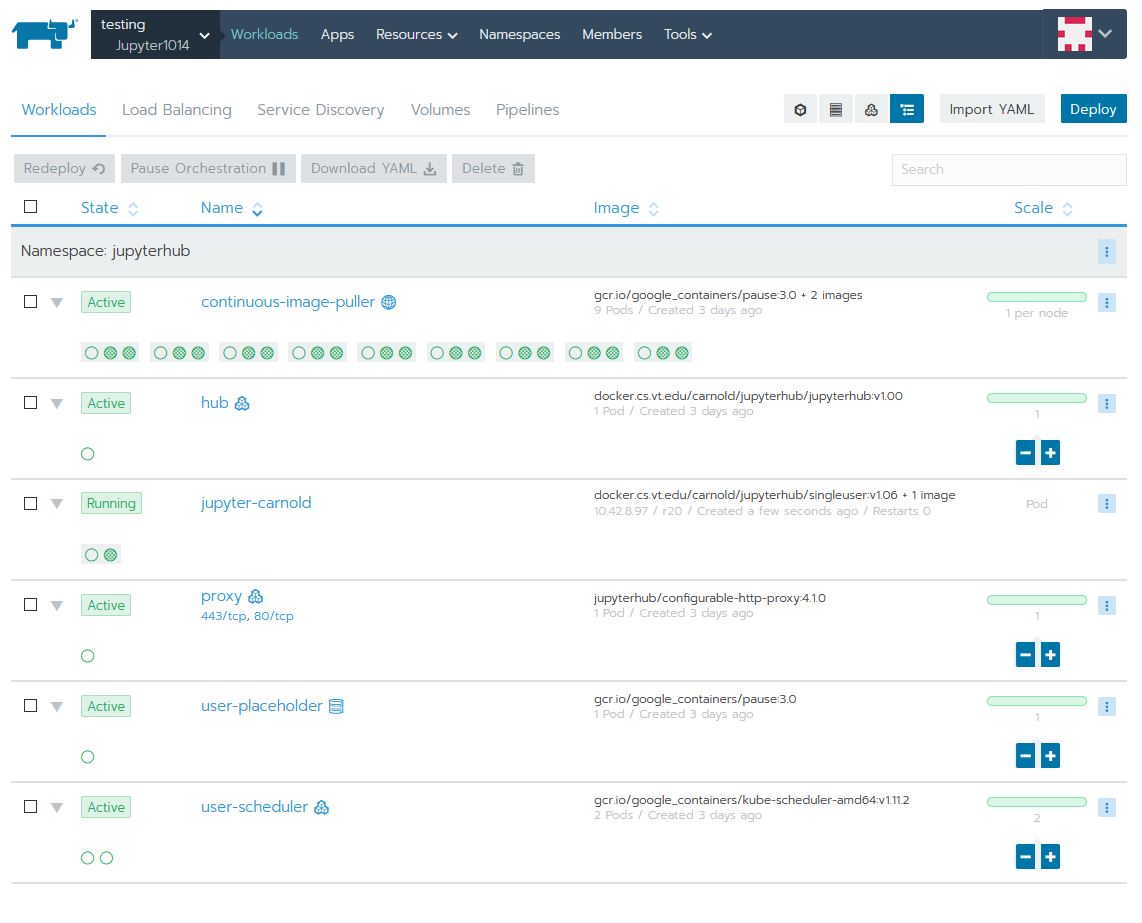

Here is what the Workloads tab looks like:

Details of each deployment:

continuous-image-puller

This deployment:

- Runs a copy of itself on all physical nodes of the cluster. Kubernetes calls this a DaemonSet.

- Pulls the image(s) needed to run the single-user Jupyter Notebook onto every node, and does so automatically on any new nodes added to the cluster, which effectively pre-caches the images. Downloading the images the first time a notebook runs on a node can take some time and detract from the user experience.

- Contains the following containers:

  - The primary image that you can see is gcr.io/google_containers/pause:3.0. This is a simple, lightweight container that doesn't do any processing; it only keeps the deployment alive.

  - The other two images are the single-user Jupyter Notebook and a networking tools image (which updates iptables). Both are run with a simple echo log command, so again they don't do any processing, but the images are downloaded and cached by Kubernetes.
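To make the structure above more concrete, here is a minimal sketch that builds a DaemonSet manifest of this shape as a Python dictionary and prints it as JSON. It is not the manifest used by this deployment. The resource name, label, and the two `example/...` image names are hypothetical placeholders, and placing the images to be cached in `initContainers` is an assumption about how such a puller can be arranged.

```python
import json


def image_puller_daemonset(images, pause_image="gcr.io/google_containers/pause:3.0"):
    """Build a DaemonSet manifest (as a dict) that pre-pulls `images` on every node."""
    labels = {"app": "image-puller-sketch"}  # hypothetical label
    # Assumption: each image to cache runs as an init container with a trivial
    # echo command. The point is the image download, not the work it does.
    init_containers = [
        {
            "name": f"pull-{index}",
            "image": image,
            "command": ["/bin/sh", "-c", "echo 'Pulling complete'"],
        }
        for index, image in enumerate(images)
    ]
    return {
        "apiVersion": "apps/v1",
        "kind": "DaemonSet",  # one pod per node, including nodes added later
        "metadata": {"name": "image-puller-sketch"},  # hypothetical name
        "spec": {
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "initContainers": init_containers,
                    # The pause container does no processing; it keeps the pod alive.
                    "containers": [{"name": "pause", "image": pause_image}],
                },
            },
        },
    }


# Hypothetical image names standing in for the notebook and networking tools images.
manifest = image_puller_daemonset(
    ["example/singleuser-notebook:latest", "example/network-tools:latest"]
)
print(json.dumps(manifest, indent=2))
```

Because the workload is a DaemonSet, Kubernetes schedules one such pod on each node, so a node that joins the cluster later pulls the images without any extra action.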