Cloud Example Jupyter

Work in progress

Introduction

The goal of this project is to support a class that teaches basic programming using Jupyter Notebook. A Jupyter Notebook server is a single process that supports only one person, so supporting a whole class requires running one notebook process per student. Jupyter offers JupyterHub, which automatically spawns these single-user processes. However, a single machine can only support around 50-70 students before suffering performance issues. Kubernetes allows this setup to scale out horizontally by spreading the single-user instances across physical nodes. I will give a breakdown of the different pieces needed to make this work and go into more detail on certain aspects.
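To make the scaling argument concrete, here is a rough sizing sketch. It only restates the 50-70 students-per-machine estimate above as arithmetic; the function name and the conservative default of 50 are assumptions for illustration, not measured values for any particular cluster.

```python
import math


def nodes_needed(students: int, students_per_node: int = 50) -> int:
    """Estimate how many nodes a class needs.

    students_per_node defaults to 50, the low end of the 50-70 range
    quoted above (an assumption; measure your own workload).
    """
    if students < 0 or students_per_node <= 0:
        raise ValueError("students must be >= 0 and students_per_node > 0")
    # Round up: a partially filled node is still a whole node.
    return math.ceil(students / students_per_node)


if __name__ == "__main__":
    for class_size in (40, 120, 300):
        print(class_size, "students ->", nodes_needed(class_size), "node(s)")
```

Using the low end of the range leaves some headroom for the hub, proxy, and scheduler pods that share the cluster.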

Workloads

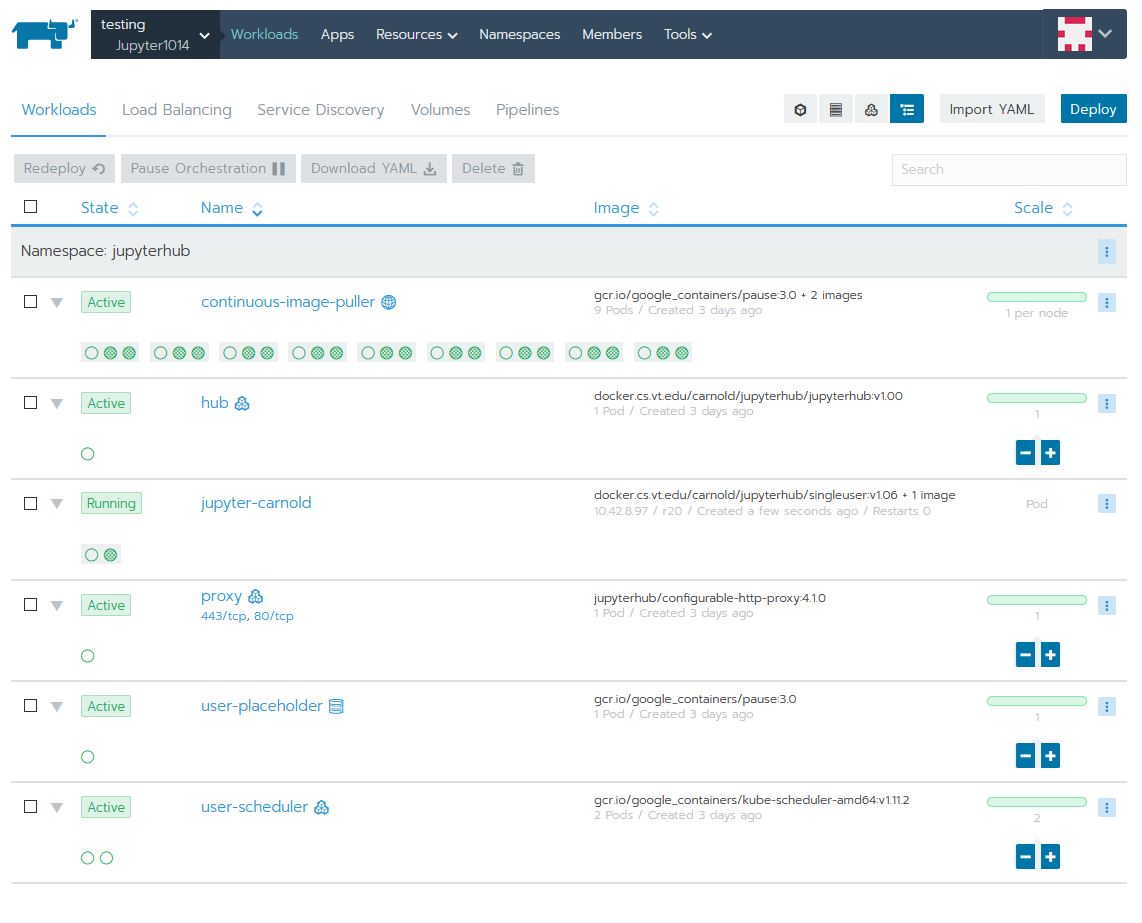

Here is what the Workloads tab looks like:

Details of each deployment:

continuous-image-puller

This deployment:

- Starts a pod on every physical node of the cluster. Kubernetes calls this a DaemonSet.

- The purpose of this deployment is to pull the image(s) needed to run the single-user Jupyter Notebook, and to pull them automatically on any new node added to the cluster, which effectively pre-caches the images. Downloading the images the first time they are run on a node can take some time and detract from the user experience.

- The containers in each pod are:

- The primary image that you can see is gcr.io/google_containers/pause:3.0. This is a simple, lightweight container that does no processing; it only keeps the pod alive.

- The other two images are the single-user Jupyter Notebook and a networking tools image (which updates iptables). Both of these images are run with a simple echo command, so they do no processing either, but the images are downloaded and cached by Kubernetes.
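The pre-caching pattern described above can be sketched as a DaemonSet manifest built in Python. This is an illustration under assumptions, not the manifest used by this deployment: the label values, container names, and the networking image name are hypothetical, and running the echo containers as init containers is my assumption about how they exit cleanly before the pause container takes over.

```python
import json

PAUSE_IMAGE = "gcr.io/google_containers/pause:3.0"


def image_puller_daemonset(images_to_cache):
    """Build a DaemonSet manifest that pre-pulls images on every node."""
    # Each image only runs a trivial echo and exits. Pulling the image
    # onto the node is the whole point; the command does no real work.
    pullers = [
        {
            "name": f"image-pull-{index}",  # hypothetical container name
            "image": image,
            "command": ["/bin/sh", "-c", "echo 'image pulled'"],
        }
        for index, image in enumerate(images_to_cache)
    ]
    labels = {"component": "continuous-image-puller"}  # hypothetical label
    return {
        "apiVersion": "apps/v1",
        "kind": "DaemonSet",
        "metadata": {"name": "continuous-image-puller"},
        "spec": {
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Assumption: pullers run as init containers.
                    "initContainers": pullers,
                    # The pause container keeps the pod alive afterwards.
                    "containers": [{"name": "pause", "image": PAUSE_IMAGE}],
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = image_puller_daemonset(
        [
            "docker.cs.vt.edu/carnold/jupyterhub/singleuser:v1.06",
            "example.org/network-tools:latest",  # hypothetical image name
        ]
    )
    print(json.dumps(manifest, indent=2))
```

Because a DaemonSet schedules one pod per node, any node that joins the cluster later pulls the same images without further action.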

hub

This deployment:

- Scalable, currently running a single pod

- The purpose of this deployment is to run the JupyterHub process that authenticates the user, configures the proxy, and calls the spawner to create a single-user instance of Jupyter for the user.

- The only container in the pod is docker.cs.vt.edu/carnold/jupyterhub/jupyterhub:v1.00. This is a customized version of the JupyterHub Docker image that adds the CAS authentication plug-in.

jupyter-carnold

This deployment:

- Runs a single pod

- This is an example of a pod automatically spawned for the user carnold. It hosts their single-user instance of Jupyter Notebook.

- The containers in this pod are:

- The primary image is docker.cs.vt.edu/carnold/jupyterhub/singleuser:v1.06. This is a customized version of the Jupyter Notebook single-user Docker image that adds a custom script that runs on startup.

proxy

This deployment:

- Scalable, currently running a single pod

- The purpose of this deployment is to run a reverse proxy that routes users to their single-user instances of Jupyter Notebook. The proxy's routes are configured by the hub deployment.

- The only container in this pod is jupyterhub/configurable-http-proxy:4.1.0. This is a Node.js proxy built on node-http-proxy.

- There is an external IP mapped to this service with ports 443 and 80 forwarding to this pod. This allows connections from outside the cluster.

user-placeholder

This deployment:

- Scalable, currently running a single pod

- The purpose of this deployment is to hold a spot (or multiple spots) on the cluster for single-user instances. This effectively reserves the resources (memory and CPU requests) necessary to run a single-user instance of Jupyter Notebook. In theory, you could scale this up to reserve as many places as you need.

- The only container in this pod is gcr.io/google_containers/pause:3.0. Again, this image does no processing; it only keeps the pod alive.
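As a rough illustration of what scaling the placeholder deployment reserves, the sketch below multiplies the replica count by a per-user resource request. The request values (0.5 CPU and 1 GiB of memory per user) and the function name are assumptions chosen for the example; substitute whatever requests your single-user pods actually declare.

```python
def reserved_capacity(placeholders, cpu_request=0.5, memory_request_gib=1.0):
    """Return the total CPU and memory held by placeholder pods.

    cpu_request and memory_request_gib are hypothetical per-user
    requests, used here only to show the arithmetic.
    """
    if placeholders < 0:
        raise ValueError("placeholders must be >= 0")
    return {
        "cpu": placeholders * cpu_request,
        "memory_gib": placeholders * memory_request_gib,
    }


if __name__ == "__main__":
    # Reserving room for ten students ahead of a lab session.
    print(reserved_capacity(10))
```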

user-scheduler

This deployment:

- Scalable, currently running two pods for redundancy

- The purpose of this deployment is to schedule the single-user instances of Jupyter Notebook, that is, to decide which node each user pod runs on. The pods themselves are created by the spawner that the hub calls.

- The only container in these pods is gcr.io/google_containers/kube-scheduler-amd64:v1.11.2. The scheduler is a complex component, and the image is published by the Kubernetes project itself. Find out more at: https://kubernetes.io/docs/reference/command-line-tools-reference/kube-scheduler

Services

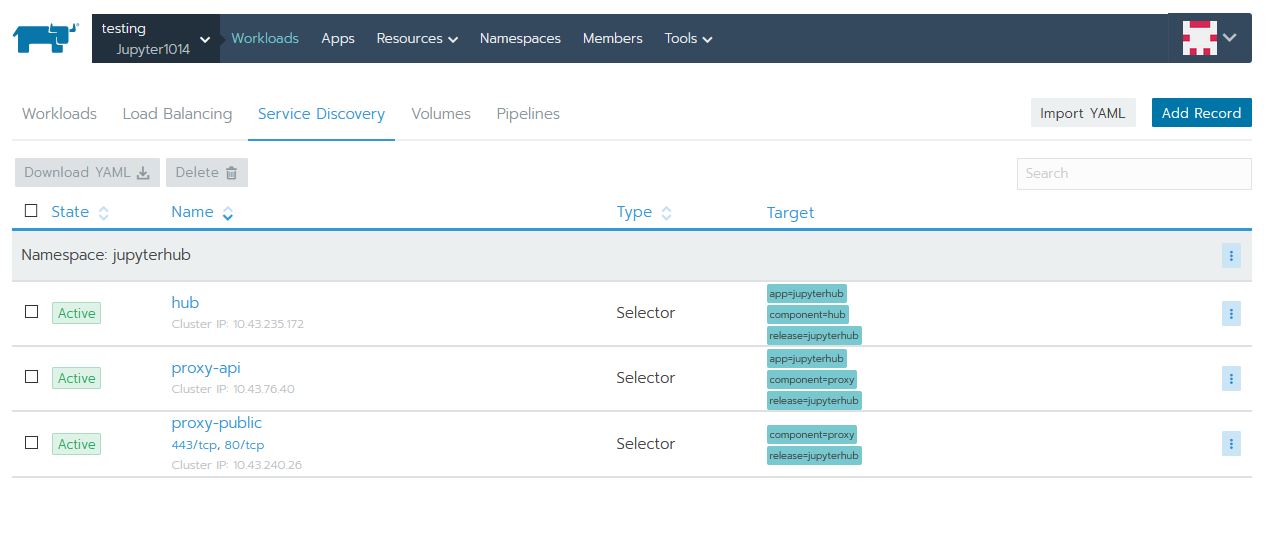

Here is what the Service Discovery tab looks like:

Details of each service:

hub

- Points to the hub deployment API port

- Allows other instances to connect to the Hub's API

proxy-api

- Points to the proxy deployment API port

- Used by the hub to configure reverse proxy entries as needed
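To show what "configuring a reverse proxy entry" looks like on the wire, here is a sketch that builds (but does not send) a route-creation request for configurable-http-proxy's REST API, which accepts a POST to `/api/routes/<path>` with a JSON body naming the target and a token in the `Authorization` header. The service URL, port 8001, token, and pod address below are placeholder assumptions; in a running cluster the hub does this itself.

```python
import json
import urllib.request


def build_route_request(api_url, token, route_path, target):
    """Build a POST request that would add one route to the proxy."""
    body = json.dumps({"target": target}).encode("utf-8")
    url = api_url.rstrip("/") + "/api/routes/" + route_path.strip("/")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"token {token}",
            "Content-Type": "application/json",
        },
    )


# All values below are hypothetical placeholders.
request = build_route_request(
    api_url="http://proxy-api:8001",
    token="secret-token",
    route_path="/user/carnold",
    target="http://10.0.0.12:8888",
)

if __name__ == "__main__":
    # Inspect the request instead of sending it.
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
```

Building the request without sending it keeps the example runnable outside the cluster; passing it to `urllib.request.urlopen` would perform the actual call.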

proxy-public

- Points to the proxy's HTTP ports (80 and 443)

- Maps the proxy ports to an external IP

Volumes

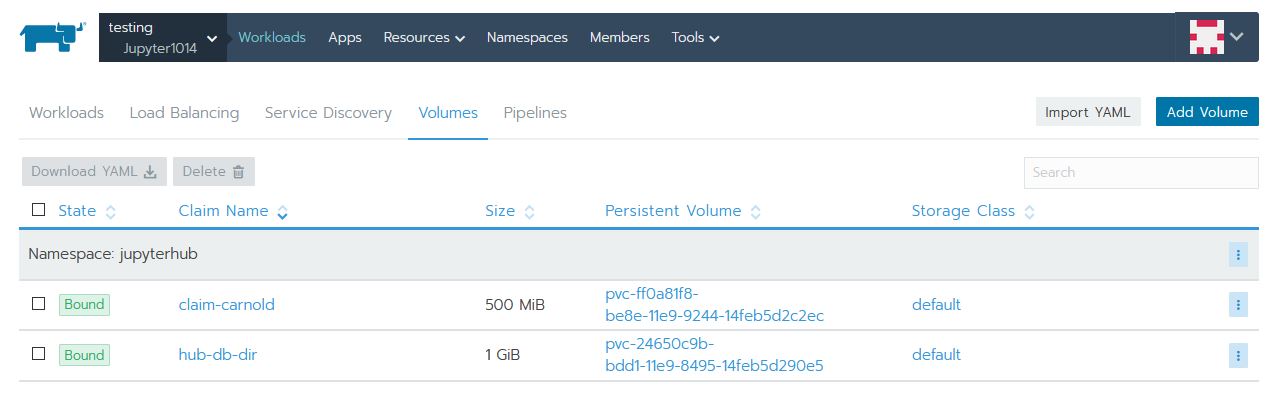

Here is what the Volumes tab looks like:

Details of each volume:

claim-carnold

- This is a persistent volume claim

- This volume is dedicated to a single-user Jupyter Notebook, specifically, the user carnold

- The purpose of this volume is to persist the user's changes to their notebooks across nodes and restarts
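A per-user claim like claim-carnold can be sketched as a PersistentVolumeClaim manifest built in Python. The `claim-<username>` naming follows what is shown above; the 1Gi size, the ReadWriteOnce access mode, and the function name are assumptions for illustration, and the real claim is created by the spawner rather than by hand.

```python
import json


def user_pvc(username, storage="1Gi"):
    """Build a PersistentVolumeClaim manifest for one user's notebooks."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": f"claim-{username}"},
        "spec": {
            # Assumption: the volume is mounted by one pod at a time.
            "accessModes": ["ReadWriteOnce"],
            # Assumption: 1Gi per user; size this for your class.
            "resources": {"requests": {"storage": storage}},
        },
    }


if __name__ == "__main__":
    print(json.dumps(user_pvc("carnold"), indent=2))
```

Because the claim outlives the pod, the user's files survive when their pod is restarted or rescheduled onto a different node.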

hub-db-dir

- This is a persistent volume claim

- This volume is used by the hub deployment to store a SQLite database for configuration.

- The purpose of this volume is to persist DB changes across nodes and restarts